Welcome back to Healthy Innovations! 👋

This week, I'm highlighting voice biomarker technology - a fundamental shift in patient health monitoring that's passive, continuous, and frictionless. Instead of waiting for patients to report symptoms or schedule appointments, we can detect physiological changes through something as simple as a phone call.

From startups to big tech, let’s take a look at what has got people talking!

What was once the domain of academic curiosity has crossed into commercial reality. Voice biomarker technology is now being integrated into CE-marked diagnostics, pharmaceutical trials, and digital therapeutics platforms. The global market, valued at approximately $600 million in 2024, is projected to reach $3 billion by 2032, growing at roughly 15% annually.
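The market figures above can be sanity-checked with the standard compound annual growth rate (CAGR) formula, CAGR = (end / start)^(1/years) - 1. Here is a minimal sketch in Python. It assumes 2024 and 2032 are the two endpoints (eight years of compounding) and treats the quoted figures as exact.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Market figures quoted above, in millions of USD (2024 -> 2032 = 8 years).
implied_rate = cagr(600, 3000, 8)
value_at_15_percent = project(600, 0.15, 8)

print(f"Growth rate implied by $600M -> $3B: {implied_rate:.1%}")
print(f"$600M compounded at 15% for 8 years: ${value_at_15_percent:,.0f}M")
```

Under those assumptions, the two endpoints imply growth of roughly 22% a year. At 15% a year, $600 million would reach only about $1.8 billion by 2032, so the quoted rate and the projection may come from different forecasts or time windows.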

This isn't incremental progress. This is medicine learning to hear what doctors have been missing.

Why voice, why now

Voice offers something wearables can't match: frictionless monitoring that needs no dedicated hardware and captures physiological shifts in seconds. There's no device to charge, no sensor to wear, no blood to draw. Just speak into your phone.

The economics are compelling.

In the U.S., heart failure readmissions account for about $50 billion a year in direct costs. For Parkinson's and depression, speech changes often precede clinical recognition by months - a diagnostic window that could fundamentally transform early intervention.

Regulators are taking notice.

The FDA's Digital Health Center of Excellence is actively evaluating first-in-class submissions for voice-based diagnostics, though no voice biomarker platforms have yet received FDA approval.

Meanwhile, early market entrants have obtained CE marks in Europe. More than 40 clinical trials are currently active across cardiology, neurology, and mental health applications.

From speech to signal: The technical foundation

The process is deceptively simple.

A patient records 10 to 30 seconds of speech - either reading a scripted phrase or speaking naturally. Digital signal processing algorithms then extract more than 200 acoustic features, including pitch variation, shimmer, jitter, prosody, harmonic-to-noise ratio, and breathing patterns.
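Two of those features, jitter and shimmer, have simple textbook definitions. Jitter is the cycle-to-cycle variation in the length of successive vocal-fold vibrations, and shimmer is the same variation in their peak amplitude. The sketch below assumes the cycle lengths and peak amplitudes have already been extracted from the recording (in practice a pitch tracker handles that step). The sample values are made up, and the function names are hypothetical rather than any vendor's pipeline.

```python
from statistics import mean


def local_jitter(periods_s: list[float]) -> float:
    """Relative jitter: mean |T[i] - T[i-1]| over successive cycles, divided by mean period."""
    diffs = [abs(b - a) for a, b in zip(periods_s, periods_s[1:])]
    return mean(diffs) / mean(periods_s)


def local_shimmer(amplitudes: list[float]) -> float:
    """Relative shimmer: mean |A[i] - A[i-1]| over successive cycles, divided by mean amplitude."""
    diffs = [abs(b - a) for a, b in zip(amplitudes, amplitudes[1:])]
    return mean(diffs) / mean(amplitudes)


def mean_pitch_hz(periods_s: list[float]) -> float:
    """Average fundamental frequency (pitch) implied by the cycle lengths."""
    return 1.0 / mean(periods_s)


# Made-up measurements for five consecutive vocal-fold cycles (~8 ms each).
periods = [0.0080, 0.0082, 0.0079, 0.0081, 0.0080]
amplitudes = [0.50, 0.52, 0.49, 0.51, 0.50]

print(f"pitch   = {mean_pitch_hz(periods):.1f} Hz")
print(f"jitter  = {local_jitter(periods):.2%}")
print(f"shimmer = {local_shimmer(amplitudes):.2%}")
```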

Machine learning models map these vocal patterns to physiological or neurological states. The output might be a "congestion score" indicating fluid accumulation in heart failure patients, a "motor-slowness index" flagging Parkinson's progression, or a "mood signature" suggesting depressive episodes.
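To make "mapping features to a score" concrete, here is a toy logistic model that turns a handful of acoustic features into a 0-1 "congestion score". The weights, bias, and feature set are hypothetical and hand-picked for illustration. This is not how HearO or any other named platform computes its scores, and production models learn their parameters from labelled recordings across many more features.

```python
import math

# Hypothetical, hand-picked weights for illustration only; a real model would
# learn them from labelled recordings and use far more than three features.
WEIGHTS = {"jitter": 40.0, "shimmer": 25.0, "speech_rate": -0.8}
BIAS = -1.0


def congestion_score(features: dict[str, float]) -> float:
    """Logistic model: a weighted sum of features squashed into a 0-1 score."""
    z = BIAS + sum(weight * features[name] for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


typical = {"jitter": 0.025, "shimmer": 0.04, "speech_rate": 4.0}  # rate in syllables/s
strained = {"jitter": 0.040, "shimmer": 0.08, "speech_rate": 3.0}

print(f"typical:  {congestion_score(typical):.2f}")
print(f"strained: {congestion_score(strained):.2f}")
```

The signs are illustrative. In this toy model, more irregular phonation and slower speech push the score up.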

The most sophisticated platforms are patient-specific: they establish an individual baseline over the first week or two, then flag deviations from that personal norm. Because voices differ far more between people than within one person from day to day, comparing patients against their own baselines can reveal smaller changes than a population-based model would catch.
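A minimal sketch of the personal-baseline idea, assuming each day's recording has already been reduced to a single score. It uses a z-score against the patient's own enrollment week with a cut-off of 3 standard deviations. The scores, the cut-off, and the function names are all hypothetical.

```python
from statistics import mean, stdev


def build_baseline(scores: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a patient's enrollment recordings."""
    if len(scores) < 2:
        raise ValueError("need at least two baseline recordings")
    sigma = stdev(scores)
    if sigma == 0:
        raise ValueError("baseline recordings show no variation")
    return mean(scores), sigma


def z_score(score: float, baseline: tuple[float, float]) -> float:
    """Distance of today's score from the personal mean, in baseline SDs."""
    mu, sigma = baseline
    return (score - mu) / sigma


def is_deviation(score: float, baseline: tuple[float, float], limit: float = 3.0) -> bool:
    """Flag scores more than `limit` personal SDs away from the patient's own norm."""
    return abs(z_score(score, baseline)) >= limit


# One hypothetical week of daily scores recorded while the patient was stable.
week_one = [0.30, 0.32, 0.29, 0.31, 0.30, 0.33, 0.31]
baseline = build_baseline(week_one)

for today in (0.32, 0.40):
    print(today, round(z_score(today, baseline), 1), is_deviation(today, baseline))
```

The design point is the comparison group. Under a hypothetical fixed population cut-off of 0.5, the 0.40 reading would pass unnoticed. Against this patient's narrow personal range, it stands out clearly.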

In practice, the results are striking.

A published study of 173 heart failure patients using Cordio Medical's HearO platform detected 10 of 13 hospitalizations an average of 12.2 days before admission, with patients recording just five sentences daily. That nearly two-week warning window can mean the difference between a planned medication adjustment and an emergency room visit.
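The headline result works out to a sensitivity of about 77% (10 of 13). Because it rests on only 13 hospitalizations, an interval around that figure is informative. The sketch below uses the Wilson score interval, a standard choice for small-sample proportions. It is an illustrative calculation, not a figure reported by the study.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z = 1.96 gives ~95% coverage."""
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    margin = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return centre - margin, centre + margin


detected, hospitalizations = 10, 13
low, high = wilson_interval(detected, hospitalizations)
print(f"sensitivity = {detected / hospitalizations:.0%}, 95% CI {low:.0%} to {high:.0%}")
```

With these inputs, the interval runs from roughly 50% to 92%. This width is why larger validation cohorts matter before the 77% figure is treated as settled.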

In a published case study from Kintsugi Health, a mental health practice using Kintsugi's voice analysis technology identified stress and depression in patients that were not evident in their self-reported assessments. This highlights how voice biomarkers can detect what patients either can't articulate or choose not to report. Kintsugi Health’s technology is designed to meet Class II medical device standards as the company pursues FDA De Novo authorization.

The competitive landscape spans multiple therapeutic areas.

Sonde Health has built a cross-condition software development kit (SDK) licensed by Astellas, Biogen, Pfizer, and Qualcomm.

Canary Ambient, from Canary Speech, is designed to detect cognitive and behavioral conditions objectively, ahead of traditional clinical screening and before symptoms are observable - allowing clinicians, payers, and patients to address conditions proactively.

Ellipsis Health has validated its platform against standardized depression and anxiety screening tools.

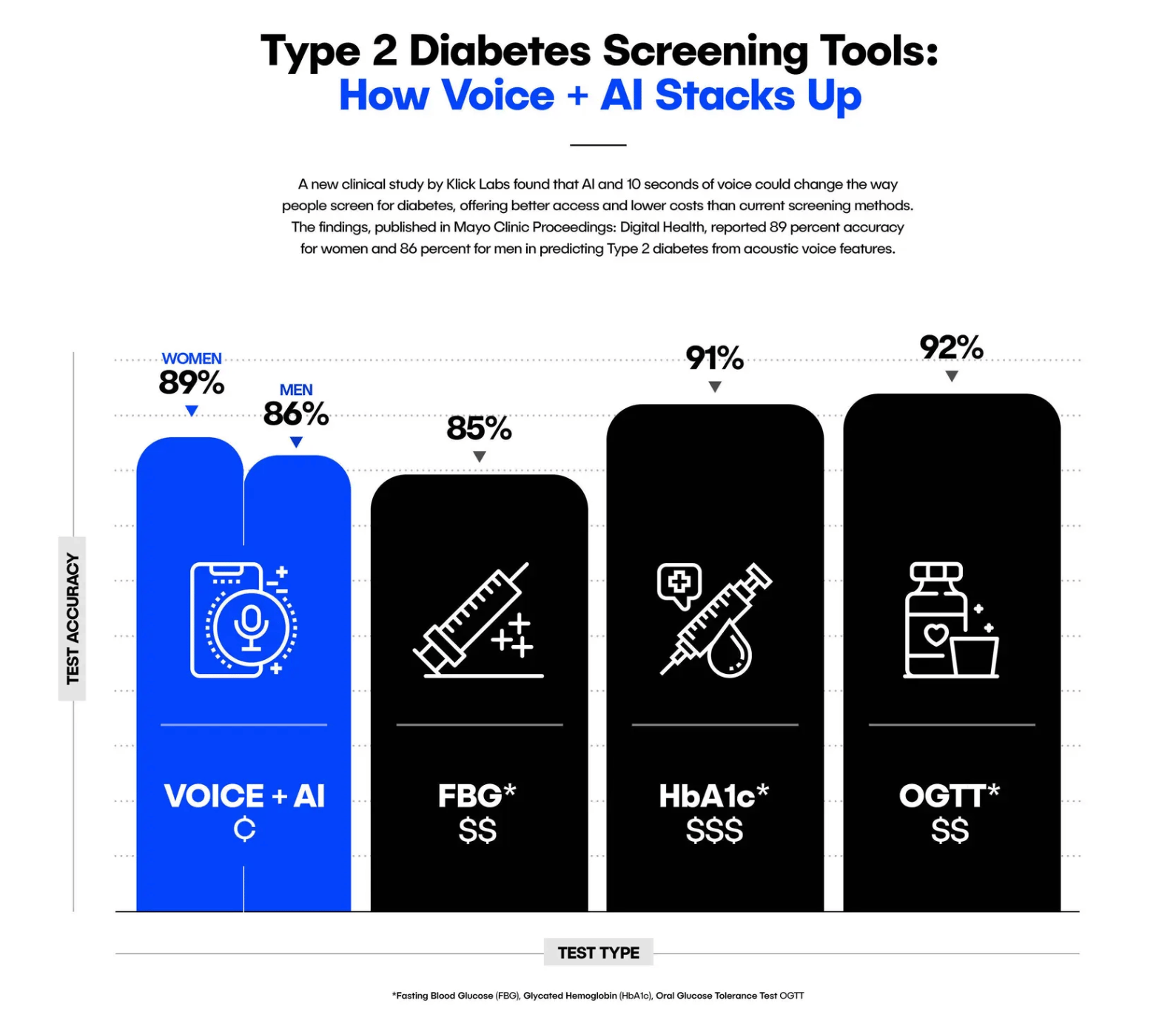

Klick Labs, part of Klick Health, is carving out a distinct niche in metabolic and cardiovascular conditions. Its October 2023 study demonstrated Type 2 diabetes detection from just 6 to 10 seconds of voice, with 89% accuracy for women and 86% for men. The team has since published studies linking blood glucose levels to voice pitch and detecting chronic hypertension with up to 84% accuracy.

The emerging frontier involves multi-modal fusion - combining voice with smartwatch vitals, facial micro-expressions, or respiratory acoustics. Early research suggests these integrated approaches could achieve diagnostic specificity above 90% by 2027.
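One simple way to combine modalities is late fusion. Each modality gets its own model, and their output probabilities are blended. The sketch below assumes each upstream model already returns a probability between 0 and 1. The modality names and weights are hypothetical, and the research mentioned above may well use jointly trained (early-fusion) models instead.

```python
def late_fusion(probs: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality risk probabilities.

    Only modalities present in `probs` are used, and their weights are
    renormalised, so a missing input (e.g. no smartwatch data today) is tolerated.
    """
    total = sum(weights[m] for m in probs)
    return sum(weights[m] * p for m, p in probs.items()) / total


# Hypothetical weights; in practice they would be tuned on validation data.
MODALITY_WEIGHTS = {"voice": 0.5, "smartwatch": 0.3, "respiratory": 0.2}

print(late_fusion({"voice": 0.7, "smartwatch": 0.6, "respiratory": 0.9}, MODALITY_WEIGHTS))
print(late_fusion({"voice": 0.7, "smartwatch": 0.6}, MODALITY_WEIGHTS))
```

Renormalising over the available inputs keeps the fused score on the same 0-1 scale on days when one data stream is missing.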

The hard problems haven't been solved

Signal noise is a persistent problem. Background chatter, poor microphone quality, and varying acoustic environments can skew spectral data. Active noise filtering and environmental calibration remain engineering bottlenecks.
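A common first line of defence is a recording-quality gate that asks the patient to re-record when the background is too loud. The sketch below is a crude energy-based signal-to-noise estimate. It assumes the loudest frames are speech and the quietest are background noise, with 400-sample frames (25 ms at 16 kHz). The 15 dB threshold and the function names are hypothetical, and real systems typically use proper voice activity detection and spectral methods instead.

```python
import math


def frame_energies(samples: list[float], frame_len: int) -> list[float]:
    """Mean power of each non-overlapping frame."""
    return [
        sum(x * x for x in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def estimate_snr_db(samples: list[float], frame_len: int = 400, fraction: float = 0.1) -> float:
    """Crude SNR: loudest frames treated as speech, quietest as background noise."""
    energies = sorted(frame_energies(samples, frame_len))
    k = max(1, int(len(energies) * fraction))
    noise = sum(energies[:k]) / k
    speech = sum(energies[-k:]) / k
    return 10 * math.log10(speech / noise)


def accept_recording(samples: list[float], min_snr_db: float = 15.0) -> bool:
    """Hypothetical quality gate: below min_snr_db, ask the patient to re-record."""
    return estimate_snr_db(samples) >= min_snr_db


# Synthetic clip: 10 quiet frames (+/-0.01) followed by 10 loud frames (+/-0.5).
quiet = [0.01 if i % 2 == 0 else -0.01 for i in range(4000)]
loud = [0.5 if i % 2 == 0 else -0.5 for i in range(4000)]
clip = quiet + loud
print(f"{estimate_snr_db(clip):.1f} dB, accepted: {accept_recording(clip)}")
```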

Regulatory pathways are still being mapped. Few harmonized frameworks exist for updating algorithms after approval, which is critical for machine learning systems that improve over time. How should regulators handle continuous learning models that evolve after market authorization? The answer is still being written.

Data privacy concerns are heightened because voice is biometric data. Under GDPR and HIPAA, voice recordings require stringent protections. Leading companies are investing in on-device processing and differential privacy techniques, but these add technical complexity and computational overhead.
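Differential privacy is easiest to see on an aggregate statistic. The sketch below shows the classic Laplace mechanism for a count, for example a hypothetical weekly report of how many patients in a cohort were flagged. It assumes one patient can change the count by at most 1. The epsilon value, the seed, and the function names are illustrative choices, not any company's implementation.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a count query.

    One patient can change a count by at most 1 (sensitivity 1), so noise with
    scale 1/epsilon gives epsilon-differential privacy for this single release.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(7)  # fixed seed so the example is reproducible
print(round(private_count(42, epsilon=1.0, rng=rng), 1))
```

Smaller epsilon values mean more noise and stronger privacy. This trade-off between accuracy and protection is part of the overhead mentioned above.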

Clinical workflow integration will determine adoption rates. Physicians are already drowning in alerts. Another notification stream risks contributing to alert fatigue unless systems incorporate adaptive thresholds and seamless EHR integration.
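One way to keep alert volume down is to combine a per-patient adaptive threshold with a persistence rule. The sketch below alerts only when a score exceeds the patient's rolling mean plus k standard deviations on several consecutive days, and it fires once per episode. The window length, k, and day count are hypothetical defaults, not values from any deployed system.

```python
from collections import deque
from statistics import mean, stdev


class AlertFilter:
    """Hypothetical alert gate: a per-patient rolling threshold plus a persistence rule."""

    def __init__(self, window: int = 14, k: float = 2.5, consecutive: int = 2, min_history: int = 3):
        self.history = deque(maxlen=window)  # recent unremarkable scores only
        self.k = k                            # rolling SDs above the mean that count as high
        self.consecutive = consecutive        # high days in a row needed before alerting
        self.min_history = min_history
        self.streak = 0

    def update(self, score: float) -> bool:
        """Record today's score; return True only on the day an episode first qualifies."""
        exceeded = False
        if len(self.history) >= self.min_history:
            threshold = mean(self.history) + self.k * stdev(self.history)
            exceeded = score > threshold
        if exceeded:
            self.streak += 1
        else:
            self.streak = 0
            # Only unremarkable days update the baseline, so a sustained
            # deterioration cannot quietly raise the bar.
            self.history.append(score)
        return self.streak == self.consecutive


gate = AlertFilter()
daily = [0.30, 0.31, 0.29, 0.30, 0.31, 0.30, 0.29,  # stable week
         0.45,                                      # one-off spike
         0.30,
         0.46, 0.47, 0.48]                          # sustained rise
alerts = [gate.update(s) for s in daily]
print([day for day, fired in enumerate(alerts) if fired])
```

In this run, the single spike is ignored. Only the second consecutive high reading in the sustained rise raises an alert, and later high days do not repeat it.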

Bias and inclusivity cannot be ignored. Accents, languages, dialects, and pre-existing vocal disorders affect model performance. Expect multilingual validation and bias testing to become standard regulatory expectations by 2026.

What comes next

By 2028, voice may join pulse, temperature, and blood pressure as a routinely monitored vital sign. Hybrid models combining voice with photoplethysmography and respiratory acoustics are under evaluation at Mayo Clinic and King's College London.

Photoplethysmography (PPG) is a non-invasive optical technique that measures blood volume changes in tissue. It works by shining light (typically green or infrared) into the skin and measuring how much is reflected back or transmitted through - an amount that varies as blood pulses through the vessels.
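The most familiar use of PPG is deriving pulse rate from the timing of successive peaks. The sketch below assumes a clean, already-filtered trace in which each heartbeat produces one peak. The synthetic signal, the 50 Hz sampling rate, and the 0.33 s minimum gap between peaks are assumptions. Real wearables add band-pass filtering and motion-artifact rejection on top of this.

```python
import math


def detect_peaks(signal: list[float], min_gap: int) -> list[int]:
    """Indices of local maxima above the signal mean, at least min_gap samples apart."""
    level = sum(signal) / len(signal)
    peaks: list[int] = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i] >= signal[i - 1] and signal[i] > signal[i + 1]
        if is_local_max and signal[i] > level and (not peaks or i - peaks[-1] >= min_gap):
            peaks.append(i)
    return peaks


def pulse_rate_bpm(signal: list[float], fs: float) -> float:
    """Pulse rate from the mean interval between successive pulse peaks."""
    # A 0.33 s minimum gap assumes the pulse stays below ~180 beats per minute.
    peaks = detect_peaks(signal, min_gap=int(0.33 * fs))
    if len(peaks) < 2:
        raise ValueError("need at least two pulse peaks")
    intervals = [(b - a) / fs for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


# Synthetic 10 s trace at 50 Hz: a 1.2 Hz (72 bpm) pulse on a constant offset.
fs = 50.0
trace = [1.0 + 0.05 * math.sin(2 * math.pi * 1.2 * n / fs) for n in range(500)]
print(f"{pulse_rate_bpm(trace, fs):.0f} bpm")
```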

Formal FDA and EMA guidance is likely once three or more voice-based diagnostics achieve market authorization, which is projected for 2026–2027.

Big Tech is circling. Amazon Clinic, Apple ResearchKit, and Google Fit teams have all filed patents for continuous voice health analytics.

The pharmaceutical industry sees strategic value in voice biomarkers as digital endpoints in clinical trials, replacing subjective questionnaires with objective measurements.

Action items for healthcare systems

Hospitals and digital health teams should pilot voice screening in heart failure, Parkinson's, and depression cohorts now. Implementation costs are relatively low - mainly storage, consent infrastructure, and integration work - while the potential savings are substantial. Each avoided heart failure readmission can offset an entire year of voice monitoring for multiple patients.

The technology isn't perfect, but it's crossing the threshold from research novelty to clinical tool. Early adopters will gain operational experience and contribute to shaping best practices.

Voice biomarkers won't replace cardiologists, neurologists, or psychiatrists. But they might help these specialists focus their expertise where it matters most - on patients showing early signs of deterioration rather than waiting for crisis-level presentations that are harder and more expensive to treat.

The future of medicine might sound different than we expected. And that's precisely the point.

Innovation highlights

🐭 Nano-chemo shows potency. Scientists wrapped chemotherapy in tiny DNA-coated spheres, boosting its effectiveness up to 20,000-fold against leukemia in mice. The nanoparticles target cancer cells more precisely than traditional chemo, which also damages healthy tissue. Early results showed no detectable side effects in the animal study. The team plans further animal testing before potential human trials, pending funding.

⌚ Checking more than time. Researchers developed an AI tool that uses smartwatch ECG sensors to detect structural heart diseases such as weakened heart muscle or damaged valves. In a study of 600 people, it identified heart problems with 88% accuracy. The technology could enable widespread early screening using devices people already own, though the researchers note limitations, including false positives and the need for broader testing.

Company to watch

🩺 Q Bio is reimagining the traditional physical exam with AI and advanced imaging technology. The Redwood City-based company offers the "Q Exam" - a 75-minute noninvasive scan that captures detailed biomarkers across nine biological subsystems without blood draws. The data generates a "digital twin" - a personalized health model that tracks changes over time.

The platform uses AI to analyze multi-modality imaging data and identify trends that might indicate future health risks. This longitudinal approach allows physicians and patients to monitor subtle changes rather than relying on annual snapshots.

Founded by a team of PhDs and MDs, Q Bio operates a flagship clinic in Redwood City and partners with longevity-focused practices. As preventive medicine gains traction, its approach represents a shift from episodic checkups to continuous health monitoring through comprehensive data analytics.

Weird and wonderful

🎬 Black, white, and technicolor dreams. Here's a wild discovery: whether you dream in color depends partly on when you were born. Before the 1960s, most people reported black-and-white dreams. Today, over 80% of us dream in full color.

The culprit? Television. Growing up with color TV appears to have changed how we experience and recall our dreams. It's not just about what happens during sleep - it's about memory. We tend to forget expected colors (yellow bananas) but vividly remember surprising ones (neon-pink bananas). Colors with personal meaning also stick.

Philosopher Eric Schwitzgebel adds a mind-bending twist: maybe dreams aren't really visual experiences at all. Perhaps they're "indeterminate" - neither colored nor monochrome - and our movie-watching habits simply teach us how to remember them afterward.

Image created using Canva AI

Thank you for reading the Healthy Innovations newsletter!

Keep an eye out for next week’s issue, where I will highlight the healthcare innovations you need to know about.

Have a great week!

Alison ✨

P.S. If you enjoyed reading the Healthy Innovations newsletter, please subscribe so I know the content is valuable to you!

P.P.S. Healthcare is evolving at an unprecedented pace, and your unique insights could be invaluable to others in the field. If you're considering starting your own newsletter to share your expertise and build a community around your healthcare niche, check out beehiiv (affiliate link). There's never been a better time to start sharing your knowledge with the world!